HowTo: Use TensorFlow Inception V3 to train an image recognition model and build an inference engine

Deep learning is divided into two stages: training and inference. Training requires extensive computation over a large amount of data to produce a model, while inference uses the trained model to provide recognition services.

Model training requires a lot of computing resources to obtain a model with good recognition accuracy. TWSC provides a container solution that uses GPU resources for computing, so models can be trained quickly.

This article demonstrates step by step how to use a TWSC Interactive Container with GPU resources[1][2], together with the default storage system, Hyper File System (HFS), as the storage space for training data and models. Using the TensorFlow Inception V3 convolutional neural network architecture and the CIFAR-10 dataset, you will train a cat and dog image recognition model, build an inference engine, and provide an external image recognition service.

- [1] You can create a container with up to 8 GPUs. For more information, please refer to Specifications and pricing.

- [2] If you need more than 8 GPUs, you can use the TWSC Taiwania 2 (HPC CLI) service to complete the work.
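CIFAR-10 contains ten classes, of which cat and dog are two. To illustrate how a cat-versus-dog subset could be picked out of the dataset labels, here is a minimal sketch. It assumes the standard CIFAR-10 label order (cat is index 3, dog is index 5). The helper name `select_cat_dog` is hypothetical, and this article does not state whether the tutorial's training script filters the data this way.

```python
# Standard CIFAR-10 class names, in label-index order.
CIFAR10_CLASSES = [
    "airplane", "automobile", "bird", "cat", "deer",
    "dog", "frog", "horse", "ship", "truck",
]


def select_cat_dog(labels):
    """Return (sample_index, binary_label) pairs for cat and dog samples.

    binary_label is 0 for cat and 1 for dog; all other classes are skipped.
    Hypothetical helper for illustration, not part of the tutorial repository.
    """
    cat = CIFAR10_CLASSES.index("cat")
    dog = CIFAR10_CLASSES.index("dog")
    remap = {cat: 0, dog: 1}
    return [(i, remap[y]) for i, y in enumerate(labels) if y in remap]


if __name__ == "__main__":
    # Made-up label list standing in for one CIFAR-10 batch.
    sample_labels = [3, 0, 5, 5, 9, 3]
    print(select_cat_dog(sample_labels))
```

Remapping to 0 and 1 keeps the subset usable as a two-class problem without carrying the original ten-class indices along.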

Part 1. Image recognition model training

Step 1. Sign in to TWSC

If you don’t have an account yet, please refer to Sign up for TWSC.

Step 2. Create a TWSC Interactive Container

Please refer to Interactive Container and create an Interactive Container with the following settings:

Image type: TensorFlow

Image version: tensorflow-23.05-tf2-py3:latest

Basic Configuration: type c.super

Step 3. Connect to the container and download the training program

- Refer to Connection methods and use Jupyter Notebook or SSH to connect to the container and work in its default storage space.

- Enter the following command to download the Inception V3 image recognition training program from the TWCC GitHub repository to the container.

git clone https://github.com/TWCC/AI-Services.git

Step 4. Conduct AI model training

- Enter the "Tutorial_Three" directory

cd AI-Services/Tutorial_Three

- Perform model training

bash V3_training.sh --path ./cifar-10-python.tar.gz

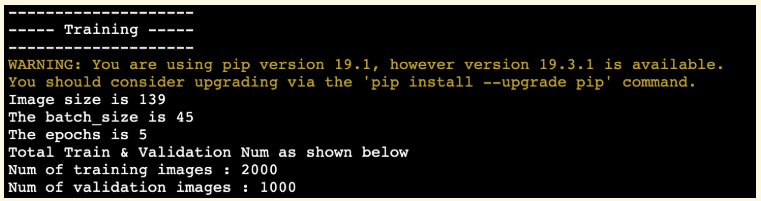
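The training command points at a dataset archive through `--path`. As a pre-flight check, the following sketch confirms that the archive exists and can be opened, and lists its members. The helper name `list_archive_members` is hypothetical, and the sketch assumes the archive is already present at that path; this article does not say whether the script fetches it on its own when it is missing.

```python
import tarfile
from pathlib import Path


def list_archive_members(archive_path):
    """Return the member names of a .tar.gz archive.

    Raises FileNotFoundError if the archive is missing and
    tarfile.ReadError if it is not a readable gzip-compressed tar file.
    """
    path = Path(archive_path)
    if not path.is_file():
        raise FileNotFoundError(f"dataset archive not found: {path}")
    with tarfile.open(path, "r:gz") as tar:
        return tar.getnames()


if __name__ == "__main__":
    # Same path as the --path argument of the training command.
    try:
        for name in list_archive_members("./cifar-10-python.tar.gz"):
            print(name)
    except (FileNotFoundError, tarfile.ReadError) as exc:
        print(f"archive check failed: {exc}")
```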

- The following message in the terminal indicates that the training job is about to start.

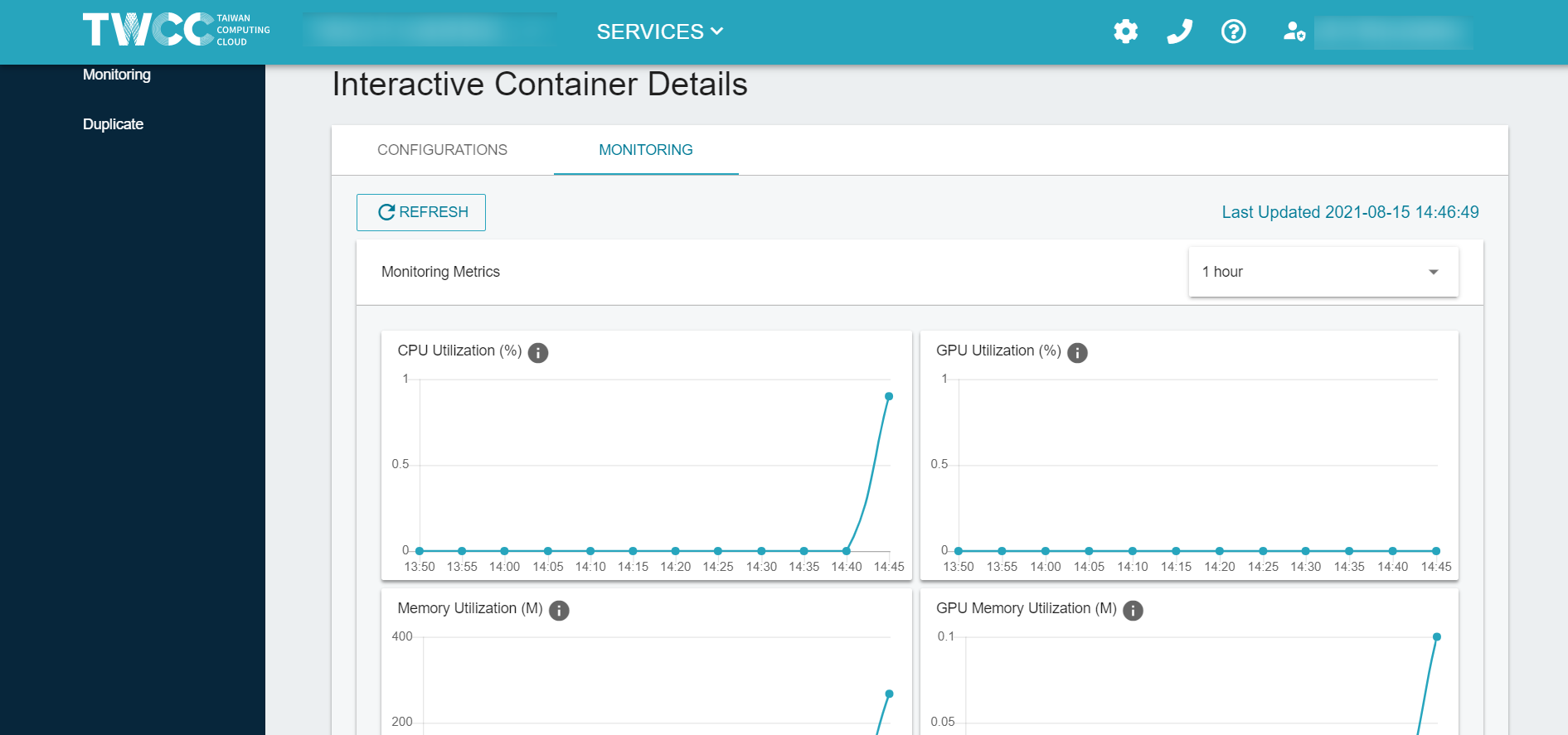

- During training, you can view CPU/GPU, memory, and network usage on the "Interactive Container Details" page.

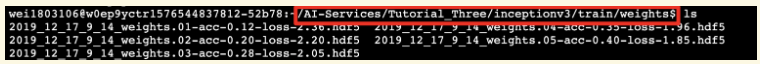
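GPU usage can also be sampled from inside the container. The sketch below is one way to do that, assuming the `nvidia-smi` tool is available in the container image (likely for a GPU container, but not confirmed by this article). The function names are hypothetical. The parser expects plain integer fields and would need extra handling if a GPU reports a value such as `[N/A]`.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi; memory values are reported in MiB.
QUERY = "utilization.gpu,memory.used,memory.total"


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    rows = []
    for line in text.strip().splitlines():
        util, used, total = (field.strip() for field in line.split(","))
        rows.append({
            "util_percent": int(util),
            "mem_used_mib": int(used),
            "mem_total_mib": int(total),
        })
    return rows


def query_gpus():
    """Run nvidia-smi once; return one dict per GPU, or [] if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for index, gpu in enumerate(query_gpus()):
        print(index, gpu)
```

Running this from a second terminal or notebook cell while training is in progress gives a point-in-time reading that can be compared with the charts on the details page.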

- After the training job completes, the model is stored in the following path:

AI-Services/Tutorial_Three/inceptionv3/train/weights
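To confirm that training actually produced output, the weights directory can be listed. This is a minimal sketch: the helper name `list_files_by_mtime` is hypothetical, the relative path assumes the script is run from the directory where the repository was cloned, and the names and format of the files written by the training script are not specified in this article.

```python
from pathlib import Path

# Output path given above, relative to where the repository was cloned.
WEIGHTS_DIR = Path("AI-Services/Tutorial_Three/inceptionv3/train/weights")


def list_files_by_mtime(directory):
    """Return (file_name, size_in_bytes) for regular files, newest first."""
    files = [p for p in Path(directory).iterdir() if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return [(p.name, p.stat().st_size) for p in files]


if __name__ == "__main__":
    if WEIGHTS_DIR.is_dir():
        for name, size in list_files_by_mtime(WEIGHTS_DIR):
            print(f"{size:>12}  {name}")
    else:
        print(f"weights directory not found: {WEIGHTS_DIR}")
```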

Part 2. Build an inference engine

The following tutorial demonstrates how to use the trained model to build an inference engine and provide web services for image recognition.

Step 1. Reconnect to the container

Please connect to the container again, using the same method as in Part 1, Step 3.

Step 2. Build an inference engine

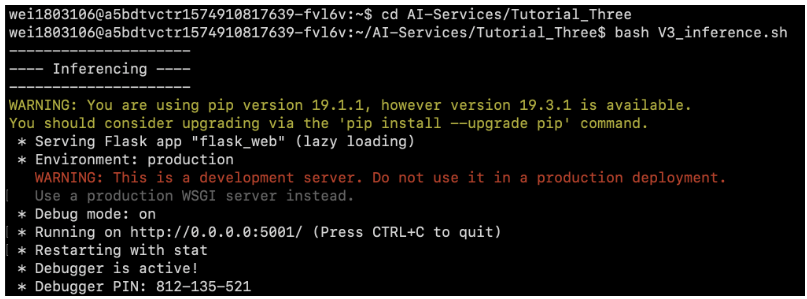

- Enter the Tutorial_Three directory

cd AI-Services/Tutorial_Three

- Enable the AI inference engine service

bash V3_inference.sh

Step 3. Image recognition website

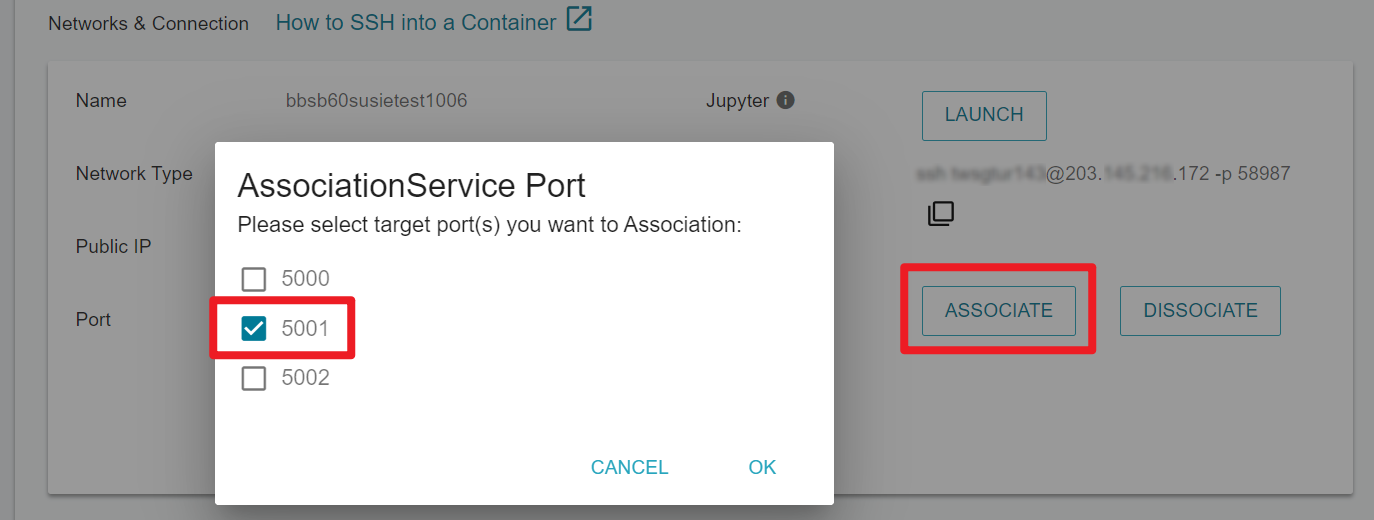

- Go to the Interactive Container Details page, click Associate service port ➡️ select 5001 ➡️ click OK to open the HTTP web service endpoint.

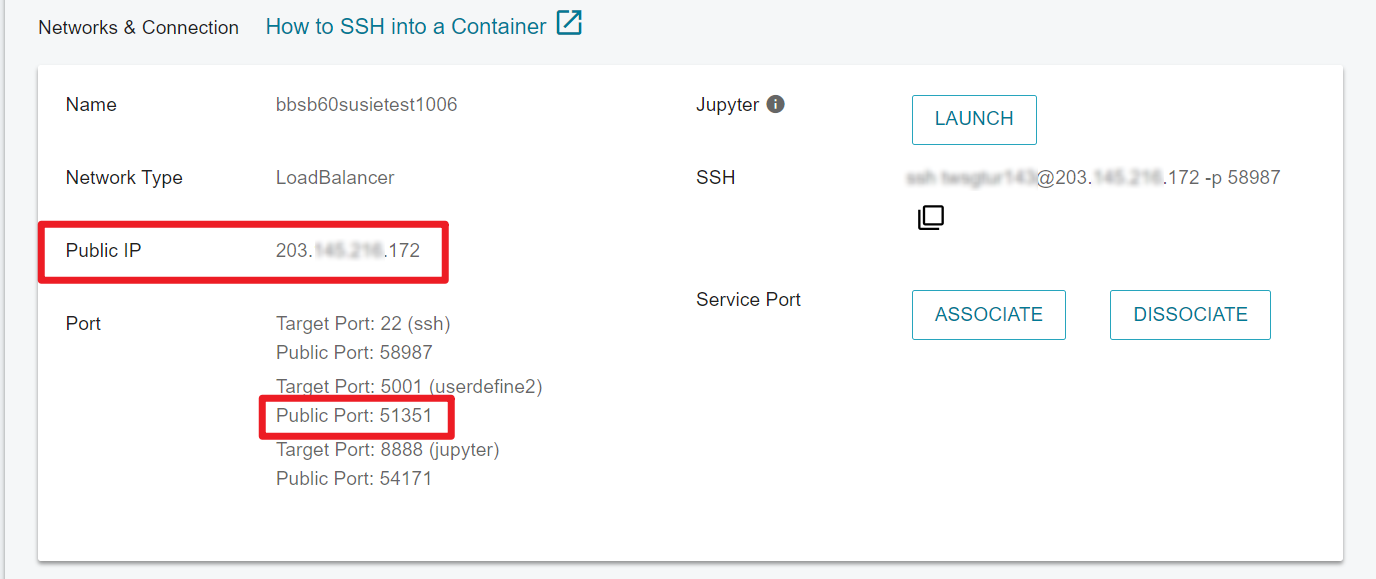

- Open a web browser and enter Container IP:Public Port in the address bar (both values are shown on the Interactive Container Details page, as in the figure below). You can then start using the AI inference service.

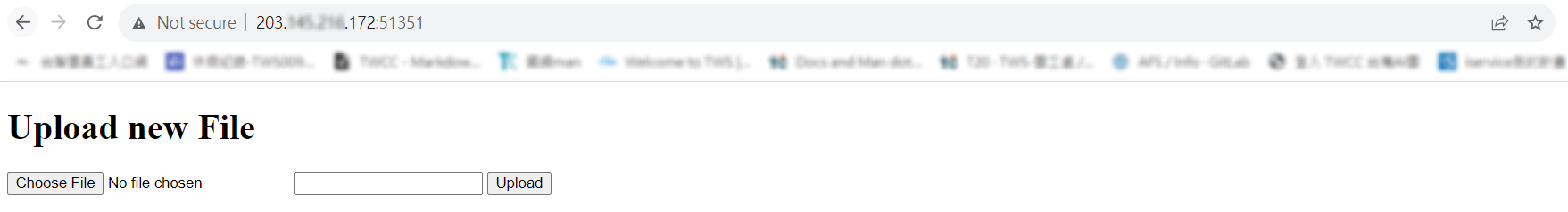
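Whether the endpoint is reachable can also be checked from a script before opening the browser. The sketch below builds the address from the two values on the details page and sends a single HTTP request. The function names are hypothetical, and the IP address and port in the example are placeholders, not real values; substitute the ones shown for your container.

```python
import urllib.error
import urllib.request


def service_url(container_ip, public_port):
    """Build the HTTP address from the container IP and public port."""
    return f"http://{container_ip}:{int(public_port)}/"


def is_reachable(url, timeout=5.0):
    """Return True if a server answers the request with any HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server responded, just not with a success status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar errors.
        return False


if __name__ == "__main__":
    # Placeholder values: replace with the Container IP and Public Port
    # shown on the Interactive Container Details page.
    url = service_url("203.0.113.10", 50001)
    print(url, "reachable" if is_reachable(url) else "not reachable")
```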

- Click Select File to choose the image you want to recognize, then click Upload to upload it.

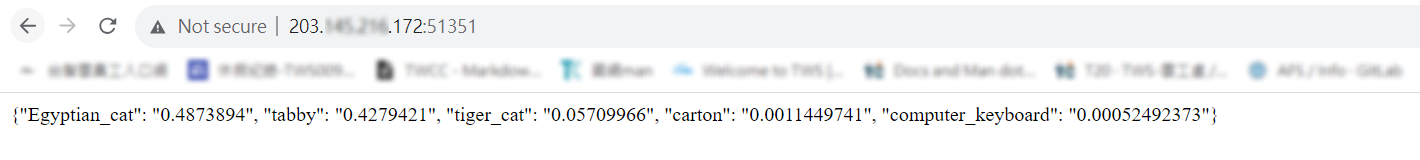

- Using a cat picture as a test example, the browser displays the recognition result and its similarity value; here the closest match is Egyptian_cat (0.4873894).

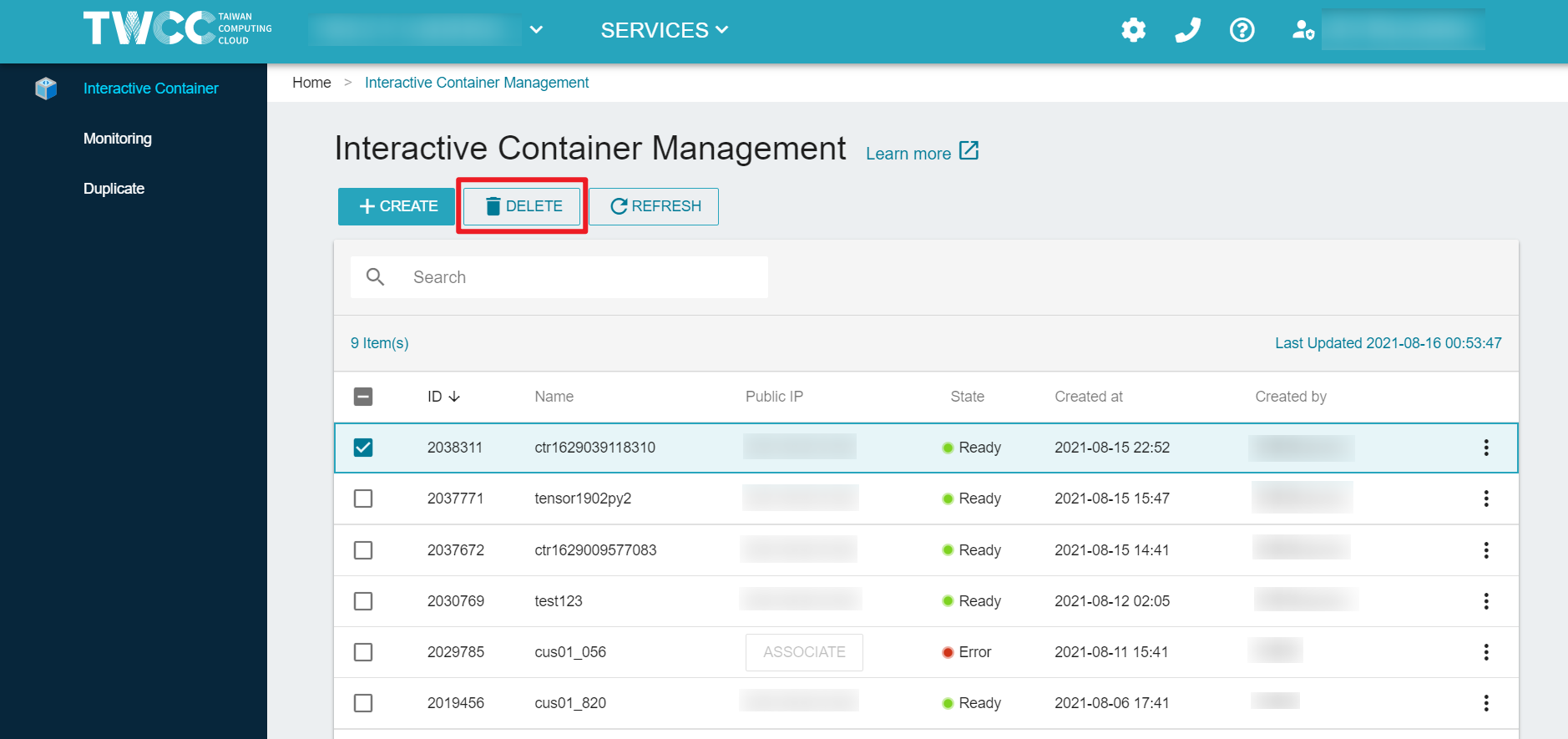
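This article does not say how the similarity value is computed. A common approach in image classifiers is to apply softmax to the network's raw class scores and report the highest-ranked labels, and the sketch below shows that approach under this assumption. The function names are hypothetical and the scores in the example are made up for illustration; they are not output from the tutorial's model.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(labels, scores, k=3):
    """Return the k (label, probability) pairs with the highest probability."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Illustrative labels and made-up raw scores.
    labels = ["Egyptian_cat", "tabby", "tiger_cat", "golden_retriever"]
    scores = [2.1, 1.7, 1.2, -0.5]
    for label, prob in top_k(labels, scores, k=3):
        print(f"{label}: {prob:.4f}")
```

Under this reading, a value such as 0.4873894 would mean the model assigns just under half of its total probability to the top label, with the remainder spread across the other classes.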

Step 4. Delete the container and recycle resources

A container is billed continuously from the moment it is created. If you no longer need it for training or inference, select the container on the TWCC Interactive Container Management page and click Delete to release the resources and stop billing.