4. Model Building

General Flow

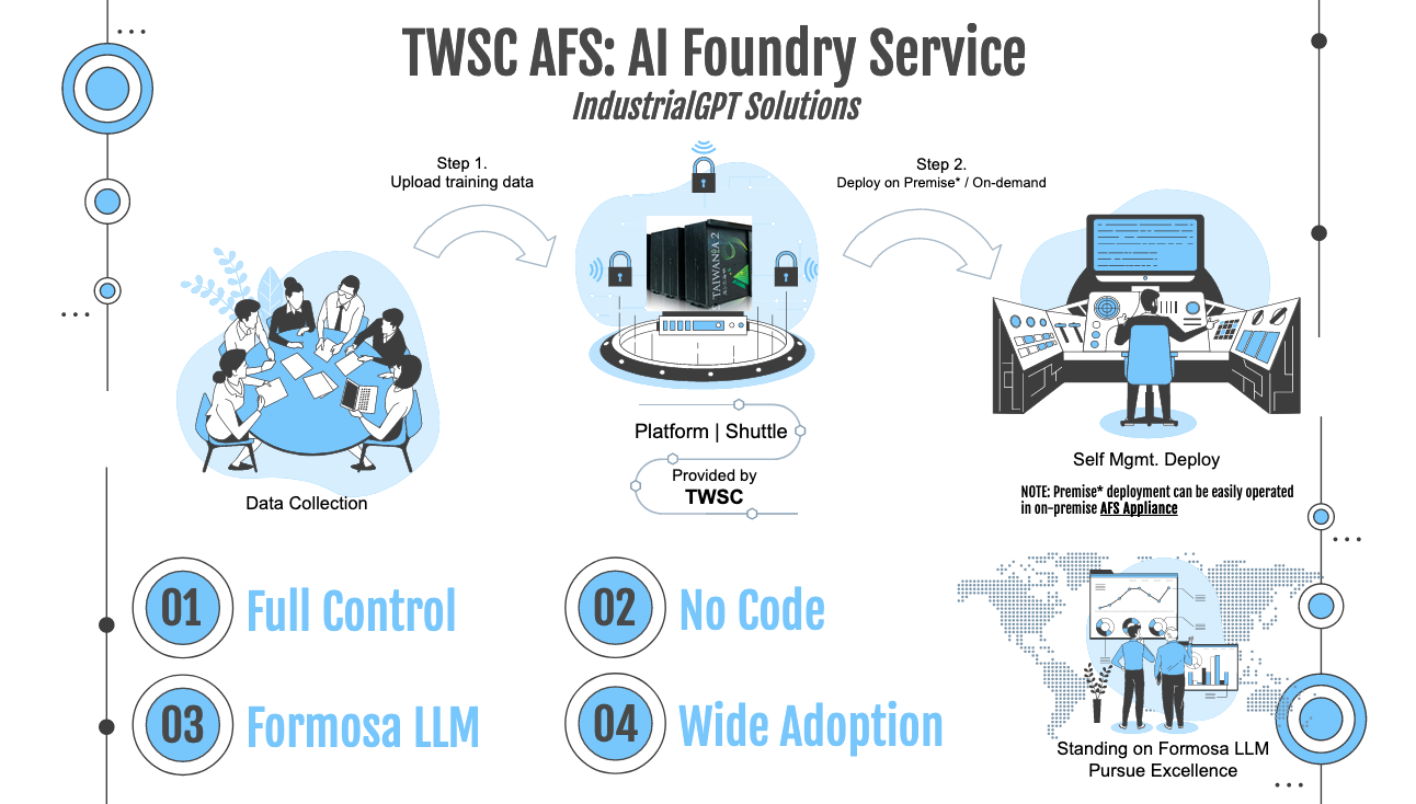

The AFS Service aims to reduce the effort required to train a large language model (LLM). As the flow diagram above shows, you first gather data suited to your applications and requirements, then upload and register it in AFS Dataset Management. Next, you start a training job in the AFS Platform, which automatically selects an appropriate training recipe and allocates dedicated high-performance computing (HPC) resources to train your LLM. When the training job completes, you can set up an AFS Cloud inference service and interact with your model through either a chat interface or an API connection.

Each step in this flow is described below:

- Data Collection: The first step is to gather data that matches your specific applications and requirements. This data is the foundation on which your LLM is trained, so it directly shapes the capabilities of your model.

- Upload and Register: Once you have collected the data, upload and register it on the AFS Dataset Management page. This system acts as a storage hub for your data and performs preprocessing tasks such as token calculation and format normalization, so that your data is well organized, easily accessible, and ready for training. Registration gives your data a designated entry point within the system.

- Dispatching Training Jobs: With your data stored in AFS Dataset Management, you can start training your LLM by dispatching a training job in the AFS Platform. Think of this as giving the command that starts the learning process for your model.

- Automatic Recipe Selection: The AFS Platform automatically selects a training recipe suited to your data and goals. A training recipe is a set of instructions and parameters that guide the learning process, and the platform chooses one that fits the characteristics of your data.

- HPC Resource Allocation: Training a sophisticated model such as an LLM requires significant computational power. The AFS Platform handles this by allocating dedicated High-Performance Computing (HPC) resources to your training job. Think of HPC resources as supercharged engines that accelerate your model's learning.

- Model Preservation: Keeping versions of your LLMs in a storage system ensures that all training assets are retained. This supports reproducibility, auditing, and the option to revert to earlier versions when necessary. Properly archived models are protected from data loss and model deterioration, which allows thoroughly tested models to be deployed in both on-cloud and on-premise production environments.

- Designate Inference: AFS Cloud uses proprietary HPC technology to run your dedicated model in an isolated environment within a multi-tenant cloud service platform, in compliance with cybersecurity regulations. You can securely access your dedicated model's inference service to quickly validate the LLM, and you can also plan to deploy the trained model to an on-premise AFS Appliance service for deep integration with enterprise systems.
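To make the Upload and Register step more concrete, the sketch below shows what format normalization and token calculation could look like if you wanted to sanity-check a dataset locally before uploading it. It is only an illustration, not how AFS Dataset Management works internally: the `prompt` and `response` field names, the JSON Lines output, and the whitespace-based token estimate are all assumptions made for this example.

```python
import json


def normalize_record(raw: dict) -> dict:
    """Collapse runs of whitespace and keep only the two assumed fields."""
    return {
        # "prompt" and "response" are hypothetical field names for this sketch
        "prompt": " ".join(str(raw.get("prompt", "")).split()),
        "response": " ".join(str(raw.get("response", "")).split()),
    }


def estimate_tokens(text: str) -> int:
    """Crude whitespace-based estimate; a real tokenizer will give other counts."""
    return len(text.split())


def prepare_dataset(records: list[dict]) -> tuple[str, int]:
    """Return the dataset as JSON Lines text plus an estimated token total."""
    lines = []
    total_tokens = 0
    for raw in records:
        record = normalize_record(raw)
        if not record["prompt"] or not record["response"]:
            continue  # skip incomplete examples
        total_tokens += estimate_tokens(record["prompt"])
        total_tokens += estimate_tokens(record["response"])
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines), total_tokens
```

Calling `prepare_dataset` on a list of raw records returns text that can be written to a file, along with a rough token count that helps you gauge dataset size before you register it.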

In essence, the AFS Service streamlines the journey of training an LLM into a short series of steps: collect your data, organize it in AFS Dataset Management, and trigger training in the AFS Platform, which then chooses a suitable recipe and provides the necessary computing resources. This orchestration gives your LLM a solid start in its learning journey.
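The ordering of the user-facing steps can be sketched as a small checklist that refuses to skip ahead. This is purely an illustrative model of the flow described above; the `Stage` and `Workflow` names are hypothetical and do not correspond to any AFS API. Recipe selection and HPC allocation are left out because the platform performs them automatically as part of the training job.

```python
from enum import IntEnum


class Stage(IntEnum):
    """User-facing steps of the flow, in the order described above."""
    DATA_COLLECTED = 1
    DATASET_REGISTERED = 2
    TRAINING_DISPATCHED = 3
    MODEL_PRESERVED = 4
    INFERENCE_READY = 5


class Workflow:
    """Tracks progress and enforces that stages are completed in order."""

    def __init__(self) -> None:
        self.completed: list[Stage] = []

    def advance(self, stage: Stage) -> None:
        if len(self.completed) == len(Stage):
            raise ValueError("workflow already complete")
        # The next valid stage is the one right after the last completed stage
        expected = Stage(len(self.completed) + 1)
        if stage != expected:
            raise ValueError(f"expected {expected.name}, got {stage.name}")
        self.completed.append(stage)
```

For example, calling `advance(Stage.TRAINING_DISPATCHED)` before `advance(Stage.DATASET_REGISTERED)` raises a `ValueError`, mirroring the requirement that data be registered before a training job is dispatched.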

For more details, please visit AFS Dataset Management, AFS Platform, and AFS Cloud.